Artificial Emotional Intelligence – A New Skyline

Abstract:

Artificial emotional intelligence, or affective computing, is one of the latest and most discussed topics in the world of artificial intelligence. According to Business Wire (2017), a Berkshire Hathaway company, the “affective computing market was valued at US$6.947 billion in 2017 and is expected to grow at a CAGR of 42.63% to reach a market size of US$41.008 billion by the year 2022.” Artificial emotional intelligence incorporates emotion into artificial intelligence, which means enabling a machine to recognize, interpret, process and simulate emotion, or affect. This article explores the concept, the research, the progress and the impact of this new horizon on business and on life as a whole.

Keywords:

Handicraft, Retailer, Artisans, Customers, etc.

Introduction

“Emotions are what make us human. Make us real. The word 'emotion' stands for energy in motion. Be truthful about your emotions, and use your mind and emotions in your favour, not against yourself.” - Robert T. Kiyosaki, “Rich Dad, Poor Dad” (2012)

Emotional intelligence separates us from the machines: is that still a correct statement? The view that emotions belong only to humans or other biological creatures has recently been challenged by artificial intelligence researchers through the concept of artificial emotional intelligence. Artificial emotional intelligence, as the name suggests, incorporates emotion into artificial intelligence; that is, it aims to develop systems that are able to recognize, interpret, process and simulate emotion, or affect. The integration of emotional intelligence with artificial intelligence is on the verge of a crucial turn and is set to become a transformational technology that will affect, to a large extent, not only industry and jobs but also society and human life as a whole.

Conceptualization: Emotion, Emotional Intelligence and Artificial Emotional Intelligence

Emotion: According to the American Psychological Association (APA), "Emotion is a complex reaction pattern, involving experiential, behavioural, and physiological elements, by which an individual attempts to deal with a personally significant matter or event. The specific quality of the emotion (e.g., fear, shame) is determined by the specific significance of the event." Anger, fear, happiness and so on are emotions of different natures that arise depending on the significance of the event; for example, danger, risk or hazard can generate the emotion of fear. Emotion involves feeling but differs from feeling in that emotion has an obvious or implied engagement with the world. Anger, fear, disgust, happiness, sadness, surprise and contempt are widely accepted as universal types of emotion. There is a long-standing debate among psychologists and philosophers about the nature of emotion: whether emotions are perceptions of physiological changes or states of cognitive judgment about goal satisfaction. Multiple theories of emotion try to explore and explain it. According to the evolutionary theory of emotion by Darwin (1872), emotions evolved in animals because they aid survival and reproduction. For example, feelings of love and affection lead people to seek mates and reproduce, and the emotion of fear helps people to either fight or flee in a threatening situation.

In the James-Lange theory of emotion, James and Lange (1887) suggest that emotions arise as a consequence of physiological responses to events. For example, if we are walking along a road and suddenly see a snake, we may start trembling, our heart may start pounding, and we may show many other physiological reactions. In this scenario the James-Lange theory suggests that we interpret these physiological reactions, and that this interpretation leads to the emotion of fear.

James-Lange View

Seeing a snake → Physiological reactions → Feeling of fear (emotion)

In the Cannon-Bard theory of emotion, Cannon and Bard (1927) disagree with the James-Lange theory. Their theory suggests that a stimulating event triggers the physiological response and the emotional experience at the same time, and that one does not cause the other. In the example, seeing a snake causes the racing heart and sweating at the same time as the emotion of fear. Cannon and Bard attribute this to the role of the thalamus in the brain.

The Schachter-Singer theory, or two-factor theory, proposed by Schachter and Singer (1962), combines elements of both the James-Lange theory and the Cannon-Bard theory of emotion. It proposes that people do infer emotions on the basis of physiological responses, but that the crucial factor is the situation and the cognitive interpretation that people use to label the emotion. It accepts that the same physiological response can produce different emotions in different situations.

According to the cognitive appraisal theory of Lazarus (1984), cognitive evaluation is the key element involved in the generation of every emotion. The theory says that two distinct forms of cognitive appraisal, which Lazarus termed "primary appraisal" and "secondary appraisal", must occur for an individual to feel stress in response to an event. It holds that thinking must come before the experience of emotion: the sequence of events starts with a stimulus, followed by thought, which then leads to the simultaneous experience of emotion and physical response.

The facial-feedback theory of emotion suggests that facial expressions are closely related to the experience of emotions: emotions are directly tied to changes in facial expression produced by the facial muscles. A lot of research has been carried out in this area recently. For example, people who are made to smile pleasantly at a social event will have a better time at the event than if they show an indifferent or neutral facial expression. Psychological research has classified six facial expressions that correspond to distinct universal emotions: disgust, sadness, happiness, fear, anger and surprise (Black and Yacoob, 1995). Clinical benefits from manipulating facial muscles have been confirmed by several studies (Finzi and Rosenthal, 2014; Maggid et al., 2014; Woolmer et al., 2012).

In Indian philosophical texts and scriptures, emotions are seen not as a separate component but as an integrated component of personality arising from the interaction of ahamkara with the external world.

According to Jain (1994), “emotions remained something to be transcended in order to achieve the ultimate goal of life.” Emotions are explained within the framework of atman (true self) and ahamkara (ego). In the Indian conception, emotion is generated by desires, which come from the experience of the ego interacting with the external world.

Sage Bharata (5th century) introduced the concept of rasa, or rasa theory, in the context of drama and theatre. Aesthetic mood is central to this approach to understanding emotional experience, as set out in the Natyashastra of Bharata Muni and in the commentary by Abhinavagupta (11th century). In this ancient text Sage Bharata describes eight major rasas: sringara (love), hasya (comic), karuna (pathos), raudra (furious), vira (heroic), bhayanaka (horror), bibhatsa (odious) and adbhuta (marvel). The corresponding emotions, or bhavas, are rati (erotic), hasa (mirth), soka (sorrow), krodha (anger), utsaha (energy), bhaya (fear), jugupsa (disgust) and vismaya (astonishment).

Emotional Intelligence

Wayne Payne (1985) used the term "emotional intelligence" for the first time in his doctoral thesis. Beasley (1987) first used the term "emotional quotient" in an article published in Mensa Magazine. However, the term attracted much wider attention in 1990 with the landmark article published by the psychologists Salovey and Mayer (1990) in the journal Imagination, Cognition, and Personality.

Emotional intelligence is one's ability to control one's own emotions and to understand, interpret and respond appropriately to the emotions of others. It consists of four main components: perceiving emotions, reasoning with emotions, understanding emotions and managing emotions.

According to Salovey and Mayer (1990), the four branches of their model are "arranged from more basic psychological processes to higher, more psychologically integrated processes. For example, the lowest level branch concerns the (relatively) simple abilities of perceiving and expressing emotion. In contrast, the highest-level branch concerns the conscious, reflective regulation of emotion."

Emotional quotient is a measure of a person's level of emotional intelligence, which refers to a person's capacity to perceive, control, evaluate and express emotions. It is now a hot topic in areas extending from business management to education. Emotional intelligence is increasingly regarded as a must-have quality for overall achievement in life.

Artificial Emotional Intelligence

“Siri doesn’t yet have the intelligence of a dog,” “Even your dog knows when you’re getting frustrated with it,” stated Picard (2017), Director of Affective Computing Research at the Massachusetts Institute of Technology (MIT) Media Lab.

One can be frustrated or infuriated by the way a digital assistant such as Siri, Alexa or Cortana gives a tone-deaf answer to a question, without any emotional engagement. Picard (2004) rightly observed that IBM’s Deep Blue supercomputer beat Grandmaster and World Champion Garry Kasparov at chess without feeling any strain or anxiety during the game and without any joy at its success, indeed without feeling anything at all. Nobody was concerned that it would feel overwhelmed or get flustered.

Emotion, or emotional intelligence, is a quality that distinguishes humans from animals. As discussed, artificial emotional intelligence is about giving a computer the ability to emulate human behaviour: besides being able to think and reason (artificial intelligence), the computer should also be able to understand and demonstrate emotions. Things are changing, and changing very fast, and a lot of research is under way in this new area of artificial intelligence. It is expected that by the year 2020 artificial emotional intelligence will be a complete technological reality.

Artificial Emotional Intelligence: Initiation, Research and Insight

Marvin Minsky (1987), the renowned MIT professor who is considered to be a founder of artificial intelligence, proposed in “The Society of Mind” that emotional ability should be incorporated into computers, and later published “The Emotion Machine: Commonsense Thinking, Artificial Intelligence, and the Future of the Human Mind”.

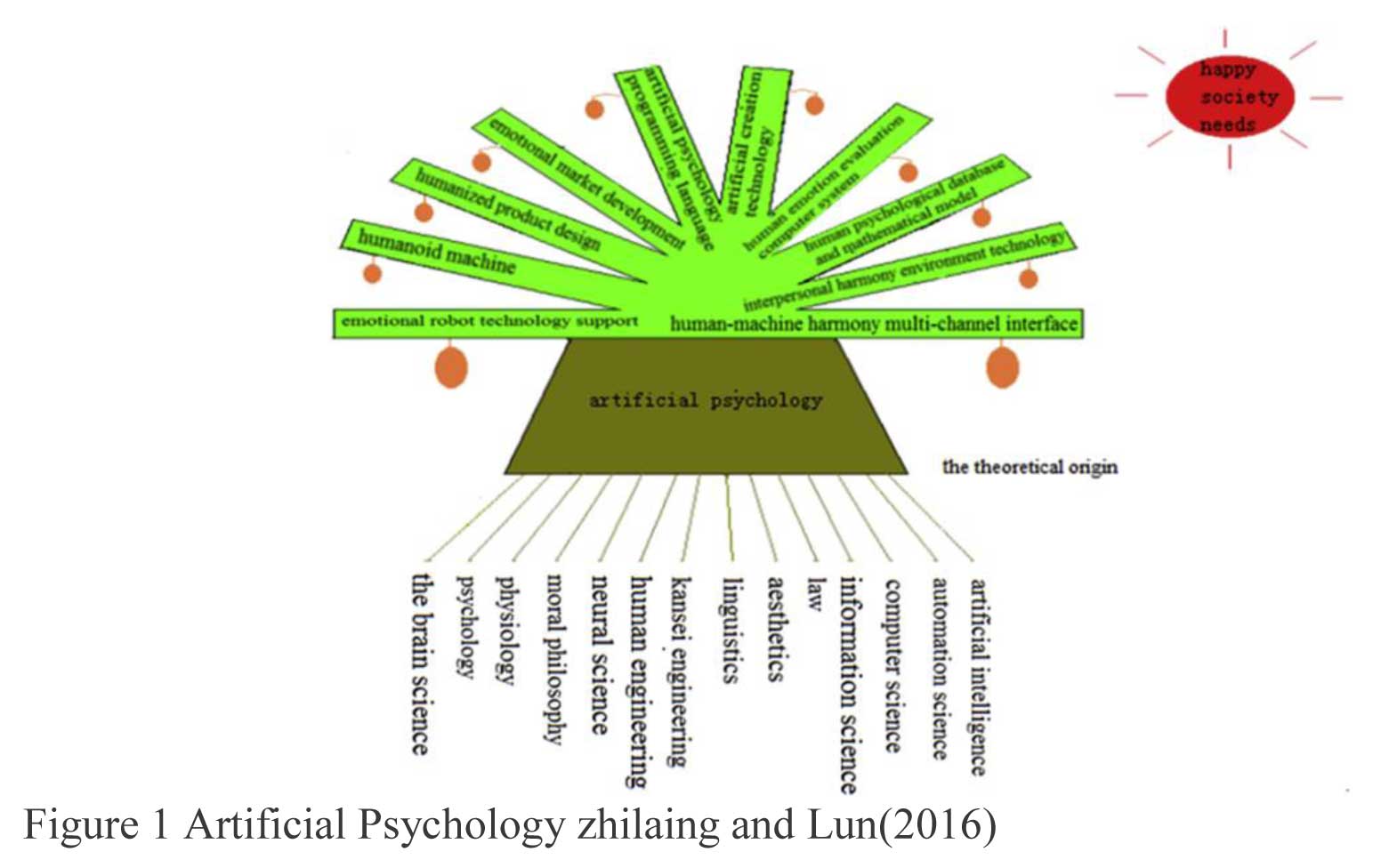

From then on, the ability of machines to understand and express emotions attracted the interest of scientists and technology companies. Only after the 1990s did the idea of including emotion in artificial intelligence start to be taken seriously. In the same time frame, a number of researchers started working to build machines that can cause, perceive, handle, comprehend and express emotions [8]. Another significant development in this domain is Kansei engineering, a technology that combines emotion and engineering and is promoted as a “happy and comfortable” science; the products developed with this technology are called “Kansei commodities.” In 1996 the Japanese Ministry of Education began providing national funding for the initiative “emotional information and psychology”, whose purpose was to study emotional information not only from the psychological perspective but also from the cross-disciplinary perspective of information science, psychology and other related disciplines ([6],[5]). Picard (1997) proposed a framework for constructing machines with emotional intelligence, and afterwards a number of other researchers built machines that are capable of reasoning about, sensing, managing and expressing emotion. At the same time, Japan has led the world in artificial emotion technology and has made considerable progress in the industrialization of Kansei engineering and in the research and production of personal robots.

Robotics reached a new height with Sony's famous Aibo robotic dog, which made a profit of USD 1 billion with 6 million units sold ([5],[12]). Other famous products in this direction are the QRIO and SDR-4X emotional robots. With the advancement of research in artificial emotional intelligence, machines are now better able to sense and recognize human emotion, to reply with better skill, to avoid escalating negative feelings, and to help people assess the abilities and behaviours that contribute to emotional intelligence. These skills are changing the way applications interact with people: agents will show consideration of human feelings and take steps to be less infuriating [3]. Affective computing, or artificial emotional intelligence, is a cross-disciplinary combination of a number of fields such as brain science, psychology, physiology, information science, computer science, law, moral philosophy and aesthetics, as the diagram below represents.

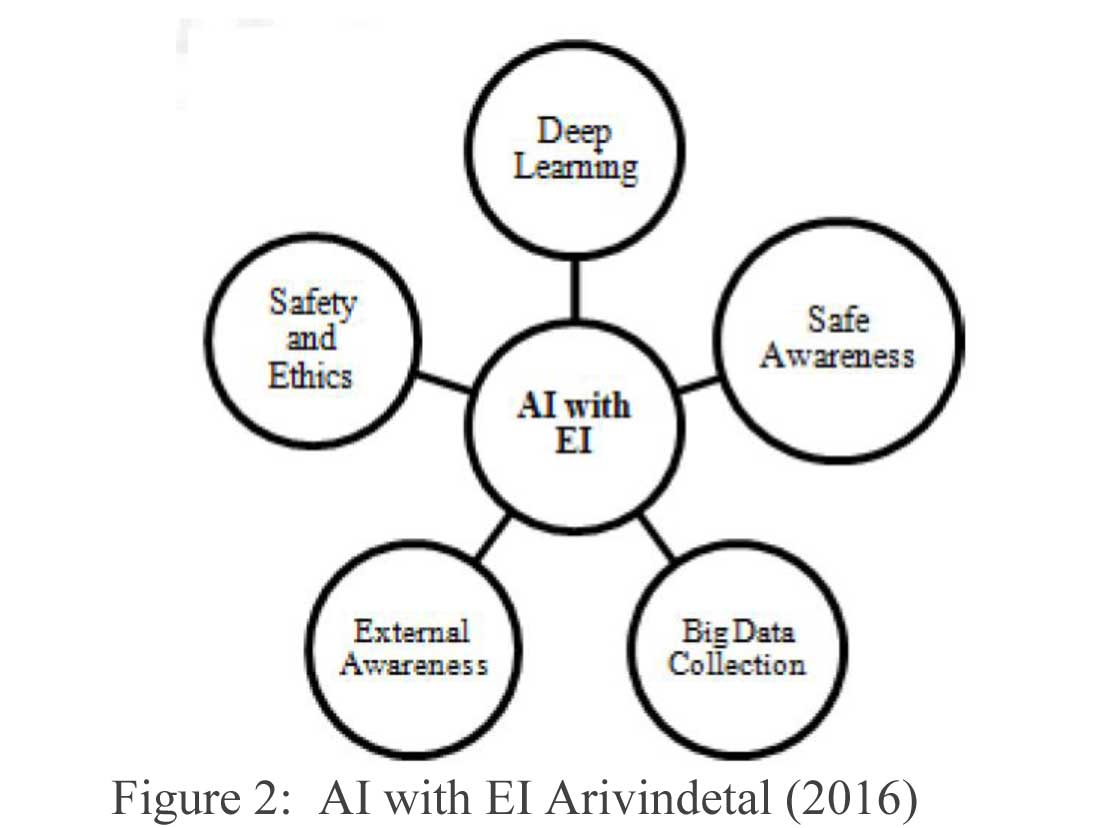

Microsoft Research [22] explains that its goal is to create systems with artificial emotional intelligence (AEI) by teaching systems to think, reason and communicate more naturally, using machine learning to classify and model multimodal emotional data streams as input for better human-computer interaction (HCI) and communication. The project brings together experts in deep learning, reinforcement learning, natural language processing (NLP), psychology, design, computational linguistics and more to work on a wide variety of problems, including emotional conversational agents and novel HCI systems. They are building upon one of the largest sets of multimodal emotion data and a rich platform of tools, with deep neural networks trained through techniques from supervised, unsupervised and reinforcement learning. This is a unique opportunity to change the way systems learn to understand and communicate with humans more naturally and, hence, more intelligently [13].

Humans use a lot of non-verbal cues, such as gestures, body language, facial expressions and tone of voice, to express their emotions. Artificial emotional intelligence should therefore be able to sense emotion from multiple channels, the way humans do [25].
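One simple way to combine several channels is weighted late fusion, sketched below in Python. This is only an illustration under stated assumptions: each channel (for example face or voice) is assumed to have its own upstream classifier that outputs a score per emotion, and the function name `fuse_channels`, the channel names and the weights are hypothetical rather than taken from any system cited here.

```python
from typing import Dict, Optional


def fuse_channels(
    channel_scores: Dict[str, Dict[str, float]],
    weights: Optional[Dict[str, float]] = None,
) -> str:
    """Weighted late fusion: sum per-channel emotion scores, return the top label.

    channel_scores maps a channel name to {emotion: score}.
    weights maps a channel name to its weight (hypothetical values);
    channels without an explicit weight default to 1.0.
    """
    weights = weights or {}
    combined: Dict[str, float] = {}
    for channel, scores in channel_scores.items():
        w = weights.get(channel, 1.0)
        for emotion, score in scores.items():
            combined[emotion] = combined.get(emotion, 0.0) + w * score
    if not combined:
        raise ValueError("no emotion scores supplied")
    # Pick the emotion with the highest combined score.
    return max(combined, key=lambda emotion: combined[emotion])


# Illustrative scores only; a real system would obtain these from trained models.
scores = {
    "face": {"happiness": 0.6, "anger": 0.4},
    "voice": {"happiness": 0.2, "anger": 0.8},
}
print(fuse_channels(scores))  # anger (combined 1.2 against 0.8 for happiness)
```

With equal weights the voice channel tips the result towards anger; giving the face channel a larger weight can change the outcome, which is the kind of design choice a multi-channel system has to make.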

In today’s business and technology world, data has become a form of capital and is critically significant for business success. “Big data” is undoubtedly one of the key contributors to the advancement of artificial intelligence and artificial emotional intelligence [36]. According to Gartner’s definition (circa 2001), "big data" is high-volume, -velocity and -variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making. Put another way, big data contains greater variety, arriving in increasing volumes and with ever-higher velocity. This is known as the three Vs:

- Volume, which refers to the amount of data, both structured and unstructured.

- Velocity, which refers to the rate at which data is received.

- Variety, which refers to the different types of data: traditional structured data as well as semi-structured or unstructured data such as audio, text and video, which require metadata to support their analysis.

With the rise and support of big data, advanced learning algorithms are being developed that can capture, analyse and store people’s desires, emotional triggers, biases and behaviours on the basis of their communications, their friends and their cultural context. In this way the treasure of data and information collected from billions of people by technology giants such as Facebook, Google, Microsoft, Twitter and others is being used to develop devices and technology for artificial intelligence and artificial emotional intelligence. AI can leverage one’s whole online history, which in most cases is more information than anybody can remember about any of their friends.

Artificial Emotional Intelligence in Action and Business

Zimmermann (2017), vice president of research at Gartner, stated: “By 2022, your personal device will know more about your emotional state than your own family.” [24] According to Harvard Business Review, just two months later a landmark study from the University of Ohio claimed that its algorithm was now better at detecting emotions than people are [22].

Huang et al. (2004) worked on learning in the domain of emotional intelligence, mainly on the architecture of a learning companion agent with facial expression of emotion. The most important result of this research was the realization of the transition between the emotion space in the emotion module and the facial expression space in the facial expression module [11]. Daviet et al. (2005) developed a model of a decision support system for a disaster environment that combines emotional behaviour and personality traits with the external events that can affect an agent [13]. Wang et al. (2009) proposed the concept of affective learning in the form of a mobile-agent-based emotional e-learning system that distinguishes and analyses the student’s emotional state on the basis of learning style, so that a virtual teacher’s avatar can regulate the learning psychology of students [12]. Tsai et al. (2010) worked on incorporating several elements, such as virtual agents, speech recognition and emotion inference, into an educational environment for students. Through the analysis of captured speech, the system was able to detect the emotional state of the student and to reply with appropriate dialogue in agent-student interaction [14].

Herrera et al. (2010) presented a fuzzy-logic-based system that generates emotions for a virtual seller or avatar [19]. Rafighi et al. (2010) presented an intelligent agent-based system that evaluates the agent's state of horror when it faces an infuriating event [15]. Salichs et al. (2012) and Schaat et al. (2013) presented a model of the traffic driving process. It concerns the approach to, and role of, artificial emotion in the decision-making process of an autonomous agent acting as a virtual or physical driver. Biologically, the decision-making system is very much dependent on motivations and emotions ([16],[17]). Karim et al. (2012) presented an efficient recommendation system based on an emotional web browsing agent, which recommends websites according to the user’s emotion by accurately taking input from the environment [25].

Mirjana et al. (2015) proposed a distributed multi-agent system (MAS) environment that uses agents for the efficient processing of emotional information. Efforts are being made in the important field of multi-agent systems to exploit logical methods for providing a rigorous specification of how emotions should be implemented in an artificial agent. Recent research in this area has focused on enabling software agents to detect emotions via verbal, non-verbal and textual cues and to express emotions through speech and gestures.

Kleber (2018), writing in Harvard Business Review, discusses the current applications of emotional AI, which according to him fall into three categories.

Systems that use emotional analysis to adjust their response

In this type of application, the AI service recognizes emotions and factors this information into its decision-making process. However, the service’s output is completely emotion-free.

Conversational IVRs (interactive voice response systems) and chatbots are applications of this type. In customer service, for example, such a system routes customers to the right service flow faster and more accurately when it factors in emotions: when the system detects that a user is angry, the user is routed to a different escalation flow or to a human. Ford and Affectiva’s Automotive AI are in a race to get emotionally intelligent car software ready that detects the emotional state of the driver and, if the driver is angry, inattentive or in a heightened state, takes control of the vehicle or stops it to prevent harm. The security sector is also using emotion AI to detect stressed or angry people, possibly even by monitoring posts on social media.
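The routing behaviour described for IVRs and chatbots can be sketched in a few lines of Python. This is a minimal sketch, not the logic of any actual product: it assumes an upstream detector that returns an emotion label with a confidence value, and the names `EmotionEstimate`, `route_customer`, the label set and the threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical labels and threshold, chosen only for illustration.
ESCALATION_EMOTIONS = {"anger", "frustration"}
CONFIDENCE_THRESHOLD = 0.7


@dataclass
class EmotionEstimate:
    label: str         # e.g. "anger" or "neutral", from an assumed upstream detector
    confidence: float  # between 0.0 and 1.0


def route_customer(estimate: EmotionEstimate) -> str:
    """Choose a service flow from the detected emotion.

    The emotion only influences the decision; the returned flow name
    (and hence the system's reply) carries no emotional content.
    """
    if (
        estimate.label in ESCALATION_EMOTIONS
        and estimate.confidence >= CONFIDENCE_THRESHOLD
    ):
        return "human_agent"
    return "standard_flow"


print(route_customer(EmotionEstimate("anger", 0.9)))    # human_agent
print(route_customer(EmotionEstimate("neutral", 0.9)))  # standard_flow
```

Requiring a minimum confidence before escalating is one possible way to avoid rerouting customers on a weak or wrong detection.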

Systems that provide a targeted emotional analysis for learning purposes

One example is the “rationalizer” bracelet, which Philips developed together with a Dutch bank. The device is meant to help traders by stopping them from making irrational decisions. It monitors the wearer’s pulse to determine whether the wearer is in a heightened emotional state, and makes the wearer aware of it so that he or she can refrain from an impulsive decision. Another example is Brain Power’s smart glasses, a Google Glass-type device through which the wearer sees and hears special feedback, based on facial expressions and on the user’s own emotional state. It can help people with autism better understand emotions.
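The idea behind a pulse-based warning of this kind can be illustrated with a very small Python sketch. The source does not describe the bracelet's actual algorithm, so everything here is an assumption: the function name `is_heightened`, the use of a resting baseline, the 25% margin and the five-reading window are hypothetical choices made only to show the principle.

```python
from statistics import mean
from typing import List


def is_heightened(
    pulse_bpm: List[float],
    baseline_bpm: float,
    ratio: float = 1.25,
    window: int = 5,
) -> bool:
    """Return True when the recent average pulse is well above the baseline.

    pulse_bpm    -- readings in beats per minute, oldest first
    baseline_bpm -- the wearer's resting pulse (assumed to be known)
    ratio        -- hypothetical margin; 1.25 means 25% above baseline
    window       -- number of most recent readings to average
    """
    if len(pulse_bpm) < window:
        return False  # not enough data to judge yet
    recent = pulse_bpm[-window:]
    return mean(recent) > baseline_bpm * ratio


calm = [70, 72, 71, 69, 70]
agitated = [95, 98, 100, 102, 99]
print(is_heightened(calm, baseline_bpm=70))      # False
print(is_heightened(agitated, baseline_bpm=70))  # True
```

Averaging over a short window rather than reacting to a single reading is one way to keep a momentary spike from triggering a warning.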

Systems that mimic and ultimately replace human-to-human interactions

Assistants such as Microsoft’s XiaoIce or Amazon’s Alexa fall into this category.

Another very significant development in this field is Emo Spark [21], described as the first AI home console: an Android-powered Wi-Fi/Bluetooth cube created with the objective of achieving a true and meaningful understanding between technology and the human emotional spectrum.

Emo Spark uses conversation and visual media, together with music, TV, smartphone, tablet, computer and gaming, to collect data, and over time creates a customized emotional profile graph (EPG), which collects and measures unique emotional input from the user.

The cube is connected to Google, Wikipedia and other free resources and is able to answer questions and project answers on over 39 million topics. Emo Spark is said to be able to feel an infinite variety of emotions within a spectrum based on eight primary human emotions: joy, sadness, trust, disgust, fear, anger, surprise and anticipation. Through use and interaction it learns from the user's responses and comments. As the cube learns and measures, it creates an emotional profile of the user, with the aim of making the user emotionally stable and happy [21].

According to Forbes (2017), Affectiva’s Emotion AI technology is already in use by 1,400 brands worldwide, including CBS, MARS and Kellogg’s, many of which use it to understand and explore the emotional impact of an advertisement video by asking viewers to switch on their camera and reading their facial expressions. The captured facial images are analyzed by deep learning and classified according to the feelings they show [27].

According to Business Wire (2017), a Berkshire Hathaway company, the affective computing market was valued at US$6.947 billion in 2017 and is forecast to grow at a CAGR of 42.63% to reach a market size of US$41.008 billion by the year 2022, with Microsoft, Google, Apple, Amazon and others in the race [28].

The thriving growth of machine learning and artificial intelligence (AI) is exciting and scary at the same time, as with every transformational technology. It is exciting because it can improve the quality of life, as in all the examples given above. But it is scary because it has social and personal implications, especially in the job market. It is unquestionable that in a number of cases a machine with AI may be much better than a human at operations such as gathering data, analyzing the data, interpreting the results, determining a recommended course of action and implementing that course of action. For example, a machine with a knowledge base and learning ability can possibly analyze a gigantic volume of data accurately and forecast much better than a human financial analyst, in a much shorter time. Self-driving cars with artificial emotional intelligence threaten the jobs of thousands of commercial drivers. Even doctors’ jobs are not free of risk: with artificial intelligence, artificial emotional intelligence and machine learning, a machine can analyze many more cases and patient histories, and scan through a huge volume of related information, to make an accurate diagnosis and to determine and plan a course of treatment without getting annoyed. The same is true of a number of other popular occupations, such as teacher, customer support executive or even counsellor, given a much-improved level of artificial emotional intelligence.
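As a quick arithmetic check on the market forecast quoted in this section (US$6.947 billion in 2017, a CAGR of 42.63%, US$41.008 billion by 2022), the compound growth can be reproduced in a few lines of Python. The function name `project_value` is hypothetical, and the check assumes five compounding years between 2017 and 2022.

```python
def project_value(start_value: float, annual_rate: float, years: int) -> float:
    """Compound start_value at annual_rate for the given number of years."""
    return start_value * (1.0 + annual_rate) ** years


# Figures from the Business Wire forecast; five years assumed (2017 to 2022).
projected_2022 = project_value(6.947, 0.4263, 5)
print(f"Projected 2022 market size: US${projected_2022:.2f} billion")
```

The result comes out at about US$41.0 billion, which agrees with the quoted US$41.008 billion to within rounding of the growth rate.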

Joshi (2017) refers to a study [32] by Oxford University and Deloitte which points to the possibility that new, complementary jobs will be created. This has important implications for HR and its workforce planning agenda, encouraging HR to look at the design of jobs and roles, especially those that require good judgment, creativity and emotional intelligence, skills that cannot be replicated completely by an automated machine. We also need to understand how we can work with robots, rather than only what robots cannot do, as this will redefine our work and work environment. It will require the skill and mindset to partner and collaborate with a new team member in the work environment: a robot or a machine with artificial intelligence and artificial emotional intelligence.

Conclusion

Mantas (2018), writing for the World Economic Forum, stated:

“The hardest part of a digital transformation is us, the 'analog' humans”

There are real challenges, and they are not only technical but also social, psychological and ethical. At the same time, for better or worse, the hype around artificial emotional intelligence is real. Researchers in artificial emotional intelligence are having great success in giving machines the ability to sense and recognize human emotion. Without doubt it will be transformative and improve the quality of life. However, it raises some crucial concerns, such as the risk of job losses and a genuine risk to the privacy of emotion, since emotion is considered to be private. There is also the risk of emotional mistreatment if emotion is wrongly detected by the machine or program. However, we should not fight the progress of technology but should work on appropriate risk management for the potential issues and negative impacts. There is no doubt that in the future machines will continue to become more sophisticated in their ability to incorporate emotional intelligence, which will help us to feel calm and relieved. Machines will be able to gain our trust and build rapport, and to help us handle a larger variety of situations [3].

But the technology is only as good as its programmer. As quoted in Marr (2017): “Bias is another issue … bias can be built into the data, there are algorithms that detect not just your emotions but your age, gender, ethnicity – we are very thoughtful and careful about how we use that data.” [27] Brynjolfsson warned that these technologies should generalise and should fit all people, not only the subsection of the population used for training. For example, identifying emotions in an African American face can be hard for a machine that has been trained on Asian Indian faces. Some gestures and voice modulations are also distinctly different across cultures [39]. It is important to remember to use the technology thoughtfully.

The purpose is not just to build machines that have emotional intelligence, but to build tools that help people enhance their own skills for managing emotions, both in themselves and in others. As Kaliouby (2019) rightly said about the thoughtful integration of the technology:

“The paradigm is not human versus machine: it's really machine augmenting human,” she said. “It's human plus machine.”

References:

- Herrera, V., Miguel, R., Schez, J. J., & Morcillo, C. (2010). Using an Emotional Intelligent Agent to support customers searches interactively in emarketplaces. 22nd International Conference on Tools with Artificial Intelligence, 1082-3409.

- Mirjana, I., Zoran, B., Radovanović, V., Kurbalija, Weihui, D., Costin, B., Mihaela, C., Ninković, S., & Mitrović, D. (2015). Emotional Agents – State of the Art and Applications. Computer Science and Information Systems, 12(4), 1121–1148.

- Picard, R. W. (2004). Toward Machines with Emotional Intelligence. IEEE International Conference on Informatics in Control, Automation and Robotics, 25-28.

- Picard, R. W. (1997). Affective Computing. M.I.T. Media Laboratory Perceptual Computing Section, Technical Report No. 321.

- Wang, Z., Xie, L., & Lu, T. (2016). Research progress of artificial psychology and artificial emotion in China. CAAI Transactions on Intelligence Technology, 1(4), 355-365.

- Zhou, H., Yang, H. W., & Cai, H. (2000). Changes of emotional speech based on SVR. J. Northwest Normal Univ., 1(45), 62-66.

- Kumar, A., Singh, R., & Chandra, R. (2016). Emotional Intelligence for Artificial Intelligence. International Journal of Science and Research, 79(57), 2319-7064.

- Sood, S. O. (2008). Emotional computation in artificial intelligence education. In AAAI Artificial Intelligence Education Colloquium, pp. 74-78.

- Kumar, A., Singh, R., & Chandra, R. (2017). Emotional Intelligence for Artificial Intelligence: A Review. International Journal of Science and Research, ISSN (Online): 2319-7064.

- Krakovsky, M. (2018). Artificial (Emotional) Intelligence. Communications of the ACM, 61(4).

- Huang, C. C., Kuo, R., Chang, M., & Heh, J. S. (2004). Foundation Analysis of Emotion Model for Designing Learning Companion Agent. IEEE International Conference on Advanced Learning, ISBN 0-7695-2181-9.

- Wang, Z., Qiao, X., & Xie, Y. (2009). An Emotional Intelligent E-learning System Based on Mobile Agent Technology. IEEE International Conference on Computer Engineering and Technology, 51-54.

- Daviet, S., Desmier, H., Briand, H., Guillet, F., & Philippe, V. (2005). A system of emotional agents for decision-support. IEEE/WIC/ACM International Conference on Intelligent Agent Technology, 711-717.

- Tsai, I. H., Lin, K. H. C., Sun, R. T., Fang, R. Y., Wang, J. F., Chen, Y. Y., Huang, C. C., & Li, J. S. (2010). Application of Educational Emotion Inference via Speech and Agent Interaction. Third IEEE International Conference on Digital Game and Intelligent Toy Enhanced Learning, 129-133.

- Rafighi, M., Layeghi, K., & Riahi, M. M. (2010). A Model Of Intelligent Agent System Using Horror Emotion. ICEMT International Conference on Education and Management Technology, 558-559.

- Schaat, S., Doblhammer, K., Wendt, A., Gelbard, F., Herret, L., & Bruckner, D. (2013). A Psychoanalytically Inspired Motivational and Emotional System for Autonomous Agents. IECON-39th Conference of the Industrial Electronics Society, 6648-6653.

- Salichs, M. A., & Malfaz, M. (2011). A New Approach to Modeling Emotions and Their Use on a Decision-Making System for Artificial Agents. IEEE Transactions on Affective Computing, 3(1).

- Karim, R., Hossain, A., Jeong, B.-S., & Choi, H.-J. (2012). An Intelligent and Emotional Web Browsing Agent. IEEE International Conference on Information Science and Applications (ICISA), 2162-9048.

- Herrera, V., Miguel, R., Castro-Schez, J. J., & Glez-Morcillo, C. (2010). Using an Emotional Intelligent Agent to support customers searches interactively in emarketplaces. IEEE International Conference on Tools with Artificial Intelligence, 1082-3409.

- Jain, U. (1994). Socio-Cultural Construction of Emotions. Psychology and Developing Societies, 6(2).

- Venkatesh, P., Kodipalli, A., BanuMathi, I., & PriyaDharsini, G. (2017). EMOSPARK-A Revolution in Human Emotion Through Artificial Intelligence. International Journal of Innovative Research in Computer and Communication Engineering, 5(3), 2278-8727.

Web Resources

- https://www.microsoft.com/en-us/research/project/artificial-emotional-intelligence/

- https://hbr.org/2018/07/3-ways-ai-is-getting-more-emotional

- https://www.gartner.com/smarterwithgartner/emotion-ai-will-personalize-interactions/

- https://blog.affectiva.com/emotion-ai-101-all-about-emotion-detection-and-affectivas-emotion-metrics

- https://www.weforum.org/agenda/2018/09/why-artificial-intelligence-is-learning-emotional-intelligence/

- https://www.forbes.com/sites/bernardmarr/2017/12/15/the-next-frontier-of-artificial-intelligence-building-machines-that-read-your-emotions/

- https://www.businesswire.com/news/home/20170927005549/en/Global-Affective-Computing-Market-2017-2022---Market

- https://hbr.org/2017/02/the-rise-of-ai-makes-emotional-intelligence-more-important

- https://www.changeboard.com/article-details/15693/ai-vs-ei-the-new-challenge-for-hr/

- https://www.allerin.com/blog/artificial-emotional-intelligence-the-future-of-ai

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3705675/

- Jain U. Socio-cultural construction of emotions. Psychol Dev Soc. 1994;6:152–67.

- https://voicetechpodcast.com/articles/development/analytics/artificial-emotional-intelligence-how-good-is-it/

- https://machinelearnings.co/the-rise-of-emotionally-intelligent-ai-fb9a814a630e

- https://plato.stanford.edu/entries/concept-emotion-india/

- https://voicetechpodcast.com/articles/development/analytics/artificial-emotional-intelligence-how-good-is-it/

- https://mitsloan.mit.edu/ideas-made-to-matter/emotion-ai-explained

- https://www.oracle.com/in/big-data/guide/what-is-big-data.html

- https://www.emospark.com

- http://www.sony.net/Products/aibo/