
Adoption of AI in transforming Hearing Aid

Abstract:

Artificial Intelligence (AI) refers to the ability of computers to handle complex situations through advanced problem-solving approaches. It is now used in everyday life: in consumer durables such as robotic vacuum cleaners and running shoes, in advanced aeronautical navigation systems, and in medical imaging systems. Many scholars have tried to draw a clear distinction between the advanced, complex solutions that computers make possible and the human concepts of "consciousness" and "thinking" (McCarthy, 2003).

Digital technology addresses the major requirements and challenges of the hearing aid (HA). It was first applied to hearing aids in 1996. There are many situations in which a uni-dimensional prediction does not capture the true complexity of sound, and a better technique is needed to confirm that signal processing achieves the desired outcome. AI hearing aids generally use Machine Learning (ML) or Deep Neural Networks (DNN) for sound synchronization and amplification.

This article reviews the application of AI in hearing aids over the last two decades. AI applications have been identified for noisy, challenging environments in which hearing is difficult. Improved sound processing, reduced background noise, and masking of unwanted sounds are among the effects AI has introduced. Further, the development of the technology has brought in data logging, which records the user's preference patterns so that the device can adjust accordingly. The article surveys these developments in AI and their future application in HAs.

Keywords:

Artificial Intelligence (AI), Hearing Aid, Digital Sound Processing (DSP), Machine Learning (ML), Deep Learning (DL), Neural Network

Introduction

Artificial Intelligence (AI) is now part of our everyday environment. AI works in the background every time we access our Facebook account, search on Google, click on Amazon, or book a trip online. But its application is not restricted to these. Many of the activities we carry out from the morning onward are connected to AI somewhere down the line, perhaps without our being aware of it. AI has been studied for a long time, yet it remains one of the most elusive subjects in computer science.

Hearing aid technology has developed quickly over the past 10 years. The introduction of digital signal processing (DSP) into hearing aids in 1996 was a landmark, because it made it possible to implement advanced signal processing algorithms. Hearing aids gained features such as directional microphones, which provide verifiable improvements to speech understanding in noise. Very few people predicted such advances in the hearing aid industry at the beginning of the 1990s. Few would have expected then that multiband wide dynamic range compression (WDRC) would become the de facto standard processing for hearing impairment: research published before 1990 had indicated that WDRC was unnecessary and even detrimental [2-4]. Directional microphones had also been tried in hearing aids to provide noise reduction, without much success.

Hearing Aids and Their Working Process

Hearing aids are used by persons with a hearing disability. Although they are not deaf, they do not receive adequate amplification as a person with normal hearing does. Further, speech is distorted and becomes difficult to understand (Elizabeth et al., 2002).

The quality of a hearing aid depends on its signal processing. Modern digital technology focuses on the concepts underlying that processing, which can be classified into three broad areas:

- Processing to implement frequency- and level-dependent amplification, which restores audibility. It also provides acceptable loudness based on the individual's hearing thresholds (generally the audiogram, but sometimes also the results of loudness scaling) as well as the individual's preferences.

- Sound cleaning, which refers to the partial removal of impulse sounds along with the reduction of acoustic feedback and noise. Noise reduction can be achieved by both single-microphone and multiple-microphone algorithms, but only the latter have shown improvements in intelligibility.

- Environment classification, which generally consists of automatically controlling the hearing aid settings for different listening environments.
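The first of these areas, frequency- and level-dependent amplification, can be illustrated with a minimal sketch of a static WDRC gain rule. This is not taken from any particular hearing aid: the function names, the kneepoint, the compression ratios, and the per-band values in `EXAMPLE_FITTING` are all hypothetical and chosen only to show the idea that soft sounds receive full gain while louder sounds receive progressively less, with a separate rule for each frequency band.

```python
def wdrc_gain_db(input_level_db, threshold_db=45.0, ratio=2.0, linear_gain_db=25.0):
    """Gain in dB for one band at a given input level (dB SPL).

    Below the kneepoint (threshold_db) the gain is constant (linear region).
    Above it, each extra dB of input produces only 1/ratio dB of extra output.
    All default values are illustrative assumptions, not clinical settings.
    """
    if ratio < 1.0:
        raise ValueError("compression ratio must be >= 1")
    if input_level_db <= threshold_db:
        return linear_gain_db
    excess_db = input_level_db - threshold_db
    return linear_gain_db - excess_db * (1.0 - 1.0 / ratio)


def output_level_db(input_level_db, **settings):
    """Output level in dB SPL after applying the level-dependent gain."""
    return input_level_db + wdrc_gain_db(input_level_db, **settings)


# Hypothetical fitting: band centre frequency (Hz) -> WDRC settings.
# In practice such values would be derived from the individual's audiogram.
EXAMPLE_FITTING = {
    500: dict(threshold_db=50.0, ratio=1.5, linear_gain_db=10.0),
    2000: dict(threshold_db=45.0, ratio=3.0, linear_gain_db=30.0),
}


def band_gains_db(band_levels_db, fitting):
    """Apply each band's own gain rule to the measured level in that band."""
    return {
        freq: wdrc_gain_db(level, **fitting[freq])
        for freq, level in band_levels_db.items()
    }
```

With the default settings, a 20 dB rise in input above the kneepoint (from 45 to 65 dB SPL) produces only a 10 dB rise in output, which is what a compression ratio of 2 means.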

Decades of research have failed to reach a consensus on the "optimal" compression speed for improving speech recognition. Some studies have indicated good performance with fast-acting WDRC, others with slow-acting WDRC (Souza, 2002). The key problem that needs to be addressed is that hearing loss does not just decrease the overall level of neural activity; it also profoundly distorts the patterns of activity in a manner that the brain no longer recognizes. Hearing loss is not just a loss of amplification and compression (Neurosci T, 2018).
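What "compression speed" means can be sketched with a simple level tracker. The code below is an illustrative one-pole envelope follower with separate attack and release time constants; it is an assumption made for explanation, not the detector used in any of the cited studies, and the function names and time constants are hypothetical. A fast-acting compressor uses a short release time, so its level estimate (and therefore its gain) follows the signal syllable by syllable; a slow-acting compressor uses a long release time and changes gain only gradually.

```python
import math


def smoothing_coeff(time_constant_ms, sample_rate_hz):
    """One-pole smoothing coefficient for the given time constant."""
    return math.exp(-1.0 / (time_constant_ms * 1e-3 * sample_rate_hz))


def track_level(samples, sample_rate_hz, attack_ms, release_ms):
    """Estimate the signal envelope sample by sample.

    The attack coefficient is used while the signal rises above the current
    estimate, the release coefficient while it falls below it.
    """
    a_attack = smoothing_coeff(attack_ms, sample_rate_hz)
    a_release = smoothing_coeff(release_ms, sample_rate_hz)
    level = 0.0
    envelope = []
    for x in samples:
        magnitude = abs(x)
        coeff = a_attack if magnitude > level else a_release
        level = coeff * level + (1.0 - coeff) * magnitude
        envelope.append(level)
    return envelope
```

As a usage example, take a 50 ms burst at full scale followed by 100 ms of silence, sampled at 16 kHz. With a 5 ms attack and a 50 ms release (fast-acting), the estimate has fallen to roughly 14% of its peak by the end of the silence; with a 1000 ms release (slow-acting), it is still at about 90%.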

Users are more likely to accept a hearing aid when they:

- suffer from moderate to severe hearing impairment;

- self-report hearing problems or participation restrictions;

- are older;

- consider there to be more benefits than barriers to amplification; and

- perceive a significant improvement from hearing rehabilitation.

The absence of these factors can act as a barrier to help-seeking for hearing impairment.

It must be understood that the hearing provided by a hearing aid is artificial hearing, and that satisfaction with it is subjective from the user's point of view. The Oxford Advanced Dictionary (2000) defines satisfaction as the good feeling one has on achieving something, or when something one wished to happen does happen. Similarly, Oliver (1997) describes satisfaction as pleasurable fulfillment: the consumer's feeling that his or her needs, desires, and goals have been fulfilled. Satisfaction is thus a pleasurable emotional experience which confirms that something right has happened, and it provides a driving force to sustain the effort that yields this feeling.

Artificial Intelligence and its workings

Artificial intelligence is the simulation of human intelligence processes by machines. Specific applications of AI include expert systems, natural language processing, speech recognition, and machine vision. John McCarthy (2004) describes it as the science and engineering of making intelligent machines, in particular intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to restrict itself to methods that are biologically observable.

Before this definition, the birth of the artificial intelligence conversation was marked by Alan Turing's seminal paper, "Computing Machinery and Intelligence". In the paper Turing asks, "Can machines think?" He offers a test, now famously known as the "Turing Test", in which a human interrogator tries to distinguish between a computer's and a human's text responses. The test has undergone many changes since its publication, but it is still considered an important part of the history of AI as well as an ongoing concept within philosophy, since it draws on ideas around linguistics.

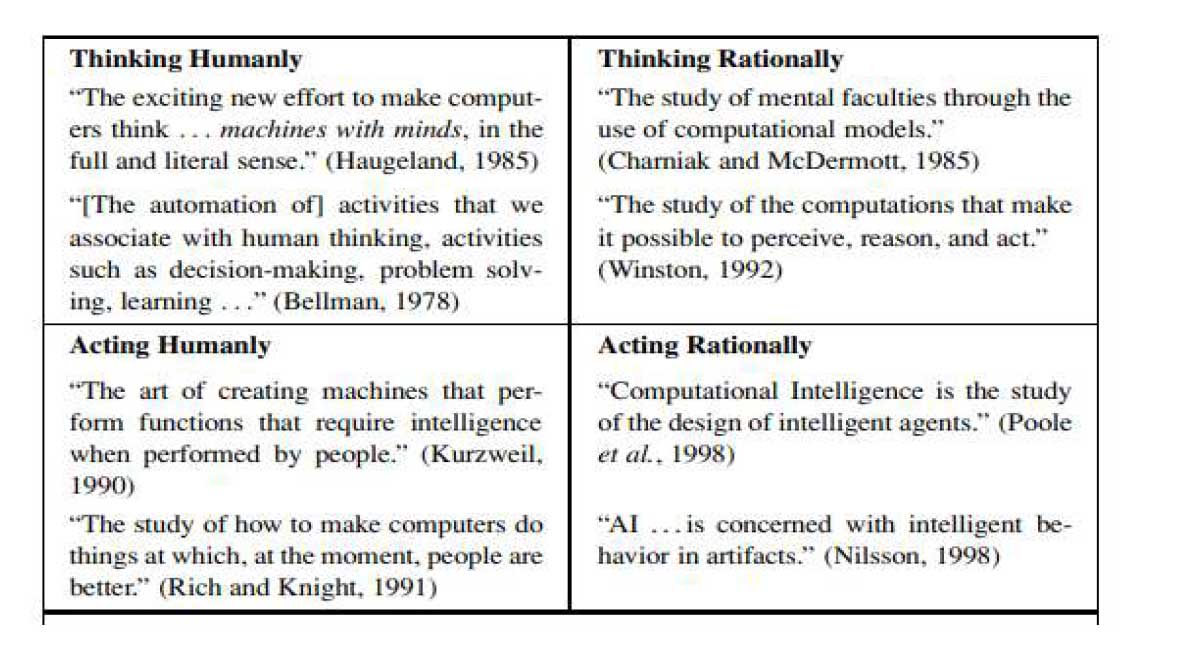

Source: - Artificial Intelligence: A Modern Approach (3rd Edition), Stuart Russell and Peter Norvig

Areas of Artificial Intelligence

A. Language understanding: The ability to "understand" and respond to natural language.

- Speech Understanding

- Semantic Information Processing (Computational Linguistics)

- Question Answering

- Information Retrieval

- Language Translation

B. Learning and adaptive systems: The ability to adapt behavior based on previous experience and to develop rules concerning the world based on that experience.

C. Problem solving: The ability to formulate a problem in a suitable representation, to plan for its solution, and to know when new information is required and how to obtain it.

- Resolution-Based Theorem Proving

- Interactive Problem Solving

- Automatic Program drafting

- Heuristic Search

D. Perception (visual): The ability to analyze a scene by relating it to an internal model representing the perceiving organism's "knowledge of the world." The result of this analysis is a structured set of relationships between the entities in the scene.

- Pattern Recognition and understanding

- Scene Analysis

E. Modeling: The ability to develop an internal representation and a set of transformation rules which can be used to predict the behavior of, and the relationships between, some set of real-world objects or entities.

- The Representation Problem for Problem Solving

- Modeling Natural Systems (Economic, Sociological, Biological etc.)

- Robot World Modeling (Perceptual as well as Functional Representations)

F. Robots: A combination of the above abilities with the ability to move over terrain as well as manipulate objects.

- Exploration

- Transportation

- Industrial Automation (e.g., Process Control, Executive Tasks)

- Security

- Other (Agriculture, Fishing, Sanitation, Construction, etc.)

G. Games: The ability to accept a formal set of rules for games such as Chess, Checkers, etc., and to translate these rules into a representation or structure which allows problem-solving and learning abilities to be used in reaching an adequate level of performance.

Application of Artificial Intelligence in Hearing Aid

Digital technology fulfilled the major expectations held for it when it was introduced in 1996. It is now present in hearing aids across a broad price range.

Importantly, AI has been incorporated into HAs in a way that has made a marked difference.

The key to advances in hearing aid performance lies in algorithm and software development. The digital hardware is already available; the task is to maximize the capabilities of digital technology as it applies to human auditory perception. AI is the vehicle by which new levels of patient benefit can be achieved in digital hearing aids.

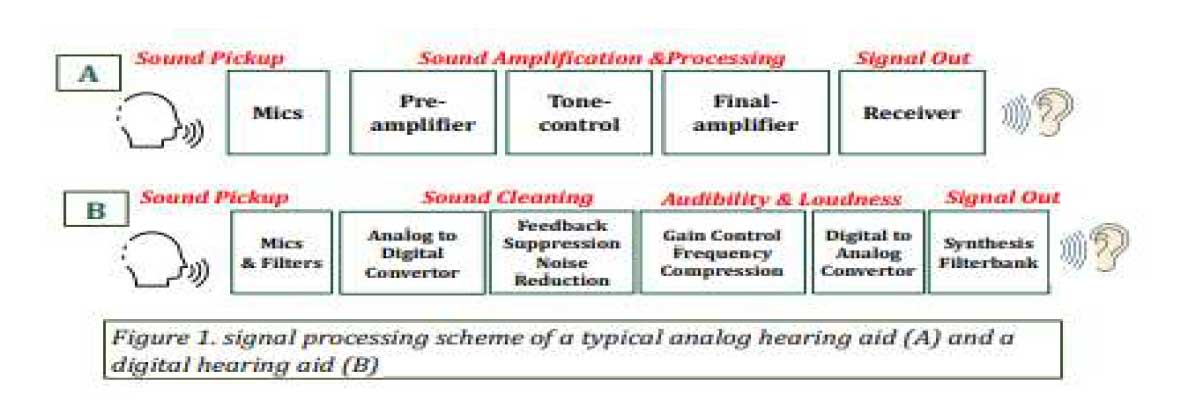

The general signal processing scheme of an analog HA and of a modern digital HA is shown above.

Source: - shuang-qi-poster-october-2022

Three revolutions in HAs have occurred through signal processing technologies over the past several decades: first nonlinear amplification, then digital signal processing, and then wireless connectivity.

Artificial intelligence (AI) has received significant attention recently and has been applied in many fields. It deserves special mention that machine intelligence is regarded as the next revolution in HAs (Zhang et al., 2016). Recent research on AI in HAs has included:

- Machine learning (ML),

- Deep learning (DL) and

- Neural network (NN)

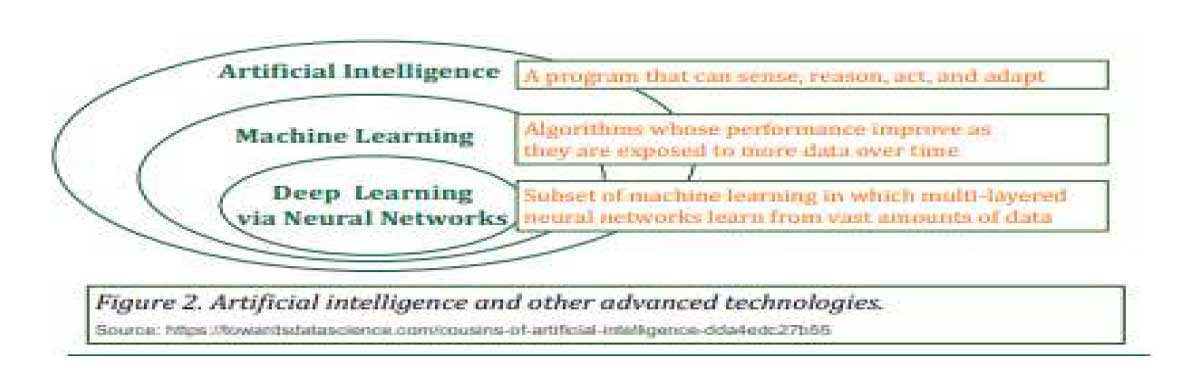

This is shown in Figure 2. These methods are designed to mimic complex neural mechanisms such as the nonlinear transmission of sounds.

Digital wireless technology transmits a higher-fidelity signal than analog systems. In a typical analog system, the signal quality decreases the further the receiver is from the transmitter. Digital signals preserve their fidelity with greater consistency: the quality remains consistently good up to some limiting distance, beyond which it drops dramatically. This limit becomes the usable distance, within which users can be sure that the sound they hear will be uncorrupted by distortion or noise. This ability of digital wireless is due in part to error correction coding, a technique that detects errors occurring in the wireless data and corrects them. Digital coding schemes are also more resistant to electromagnetic interference from other devices transmitting wirelessly in the area.
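The principle of error correction coding can be shown with a small sketch. The article does not say which code wireless hearing aids use, so the example below uses the classic Hamming(7,4) code purely as an illustration: four data bits are sent together with three parity bits, and the receiver can locate and flip back any single corrupted bit. The function names are hypothetical.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword (positions p1 p2 d1 p3 d2 d3 d4)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return (data_bits, error_position).

    error_position is the 1-based position of the corrected bit, or 0 if the
    received codeword was already consistent.
    """
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s3  # syndrome read as a binary number
    if error_position:
        c[error_position - 1] ^= 1  # flip the corrupted bit back
    return [c[2], c[4], c[5], c[6]], error_position
```

If the codeword for `[1, 0, 1, 1]` is sent and one bit is flipped in transit, `hamming74_decode` still returns the original four bits together with the position of the bit it repaired. Two or more flipped bits in the same codeword exceed what this code can correct, which is consistent with the sharp drop in quality beyond the usable distance described above.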

Conclusion

AI applied to HA technologies is still growing rapidly, and it is highly dependent on the development of digital processing in wearable devices. The benefits of AI-based HAs lie in noise reduction and speech enhancement. In situations without much noise, AI may not show additional benefits, but in noisy, challenging environments its contribution is considerable.

As digital hearing aid technology matures, new innovations become more difficult to develop. Engineering approaches have driven applications until now. Future advances will need the collaboration of many fields, namely psychoacoustics, signal processing, and clinical audiology.

Concepts of connectivity and individuality will drive the new applications. As the interaction between hearing aid processing and complex auditory and cognitive function becomes better understood, new concepts in digital hearing aid technology will be developed to account for these interactions. As DSP chips become more advanced in capability, current algorithms will be improved, and new algorithms will be created with inspiration from sources such as auditory models and other audio industries. Patient benefit will drive all of this development, as evidence-based practice becomes more popular. This alone will cause engineering development to work much more closely with audiology and auditory science, as new diagnostic measures and validation procedures are developed in conjunction with new digital technology.

References

- Strom KE. The HR 2006 Dispenser Survey. Hear Rev. 2006; 13:16-39.

- Mermelstein P, Yasheng Q. Nonlinear filtering of the LPC residual for noise suppression and speech quality enhancement. Presented at: IEEE Workshop on Speech Coding for Telecommunications Proceedings: Back to Basics in Attacking Fundamental Problems in Speech Coding; Pocono Manor, Pa; 1997.

- Pichora-Fuller MK, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. J Acoust Soc Am. 1995; 97:593-608.

- De Gennaro S, Braida LD, Durlach NI. Multi-channel syllabic compression for severely impaired listeners. J Rehabil Res Dev. 1986; 23:17-24.

- Lippmann RP, Braida LD, Durlach NI. Study of multichannel amplitude compression and linear amplification for persons with sensorineural hearing loss. J Acoust Soc Am. 1981; 69:524-534.

- Plomp R. The negative effect of amplitude compression in multichannel hearing aids in the light of the modulation-transfer function. J Acoust Soc Am. 1988; 83:2322-2327.

- Kochkin S. MarkeTrak VII: Customer satisfaction with hearing instruments in the digital age. Hear J. 2005; 58:30-42.

- Christensen CM. The Innovator's Dilemma. Cambridge, MA: Harvard Business School Press; 1997.

- Beecher F. A vision of the future: A 'concept hearing aid' with Bluetooth wireless technology. Hear J. 2000; 53:40-44.

- Yeldener S, Rieser JH. A background noise reduction technique based on sinusoidal speech coding systems. Presented at: IEEE-International Conference on Acoustics, Speech, and Signal Processing; Istanbul, Turkey; 2000.

- Ross M. Tele-coils are about more than telephones. Hear J. 2006; 59:24-28.

- Yanz JL, Roberts R, Colburn T. The ongoing evolution of Bluetooth in hearing care. Hear Rev. 2006. In press.

- Myers DG. In a looped America, hearing aids would be twice as valuable. Hear J. 2006; 59:17-23.

- Brandenburg K, Bosi M. Overview of MPEG audio: current and future standards for low bit-rate audio coding. J Audio Eng Soc. 1997; 45:4-21.

- Yanz JL. The future of wireless devices in hearing care: a technology that promises to transform the hearing industry. Hear Rev. 2006; 13:18-20.

- Van Veen BD, Buckley KM. Beamforming: a versatile approach to spatial filtering. IEEE ASSP Magazine. 1988; 5:4-24.

- Besing J, Koehnke J, Zurek P, Kawakyu K, Lister J. Aided and unaided performance on a clinical test of sound localization. J Acoust Soc Am. 1999; 105:1025.

- Desloge J, Rabinowitz W, Zurek P. Microphone-array hearing aids with binaural output Part I: Fixed-processing systems. IEEE Trans Speech Audio Proc. 1997; 5:529-542.

- Van den Bogaert T, Klasen TJ, Moonen M, Van Deun L, Wouters J. Horizontal localization with bilateral hearing aids: without is better than with. J Acoust Soc Am. 2006; 119:515-526.

- Klasen TJ, Moonen M, Van den Bogaert T, Wouters J.